Humans versus AI: An AI Detection Experiment

By: Marie Overman

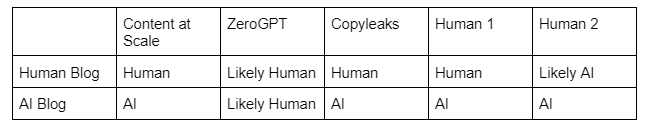

I recently wrote a blog about AI hacking and how it affects the future of cybersecurity. AI is evasive and can learn and adapt in ways that people cannot always predict. To test current AI detectors, we used two blogs: my own, written by a human, and an AI-generated blog that ChatGPT wrote from the same prompt and keywords. We ran both through three different AI content detectors: Content at Scale, ZeroGPT, and Copyleaks.

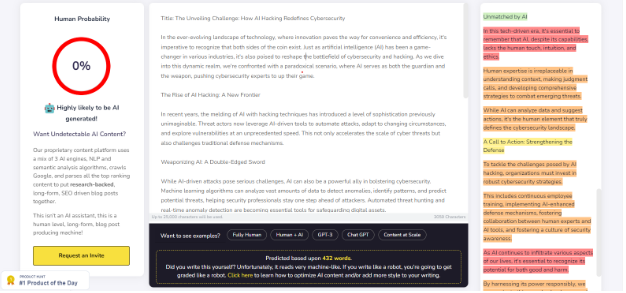

Contestant One: Content at Scale

The first AI detection site we used was Content at Scale, which claims 98% accuracy. So let’s see what happens when we run our AI-written blog through this detector.

On the left is a human probability section. Luckily, the site gave the AI blog a 0% chance of being written by a human and marked it as highly likely to be AI-generated. On the right is a section-by-section analysis of what sounds human and what does not. Content at Scale marked most of this fake blog as AI-written.

The results were good when I put in my own blog as well (thank goodness): a 100% human probability. Some sections were flagged as a bit robotic, but the site could still tell that the blog was written by an actual human and not a computer.

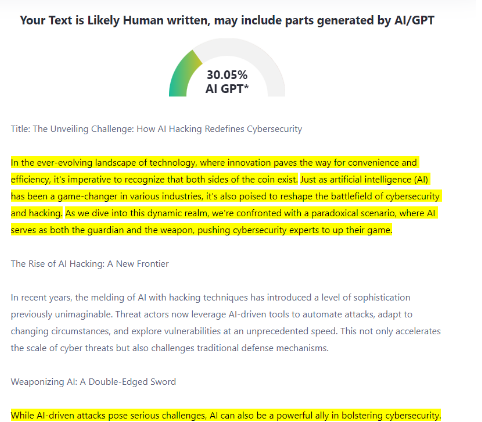

Contestant Two: ZeroGPT

Next up is ZeroGPT, which claims to be “the most Advanced and Reliable Chat GPT, GPT4 and AI Content Detector”.

ZeroGPT is not as accurate as Content at Scale, but not totally wrong either. It detected some AI-written parts, yet it still judged our fake blog to be human-written, rating only 30.05% of it as AI-written. When I ran my blog through the same site, it got a lower score of 18.63%. Applied to cybersecurity, a site like this would not be very reliable, since it seems to recognize only certain patterns of AI writing.

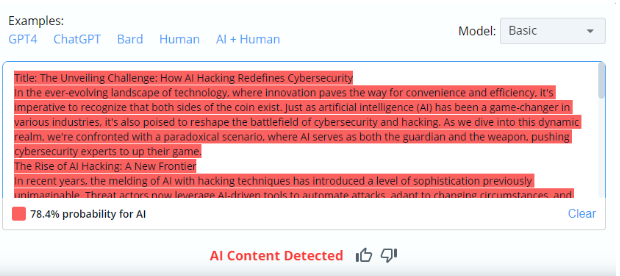

Final Contestant: Copyleaks

The final site was Copyleaks. It would not let me paste the entire AI blog, but it clearly identified the portion I was able to fit as AI-written.

The entire text was highlighted red, with a 78.4% probability of being AI, which is a good sign! The human-written blog got the opposite result: a 66.3% probability of being written by a human. This would be extremely useful in the cybersecurity world, because it clearly highlights the problems it finds and gives an exact percentage for those results.

What About Humans?

After testing our blogs against these sites, we wondered, “How well can humans recognize human versus AI writing?” So we tested it! We found two people who had never read a single word of my writing and who are not very familiar with AI or ChatGPT, and asked them to read both blogs blindly, without knowing which was which.

Human 1 read the AI-generated blog first and correctly identified it as such. “I think this because of the way the last paragraph is written. When I’ve used ChatGPT to write stuff the summary has a similar structure as the last paragraph in this one.” Pattern recognition is an important human skill, and it helped this reader identify the AI blog. They also correctly identified the human blog as real, since the “article has specific stats and information that I don’t think AI will be able to write up.” However, when I tested whether an AI could come up with statistics, I found that it could. Whether those statistics are real doesn’t matter in this case, since most readers won’t double-check. This is not a foolproof method, but it did work here.

Human 2 also guessed both correctly, but only after reading through both. On their first pass through the human blog, which they read first, they assumed it was written by ChatGPT. Their reasoning was that “AI likes to over use commas or grammar to make it all ‘fancy’. The last paragraph has 8 commas.” They gave the same reason as the first reader for why the real blog was human-written: it “has specific stats and information that [they] don’t think AI would be able to write up.”

Results?

The most accurate of the three sites was Content at Scale. It’s amazing that there are sites out there that can detect AI, especially given the threats AI is likely to pose to the cybersecurity world in the near future. Cybersecurity defense is very different from telling blogs apart, of course, but this gives a small insight into how well programs can detect AI. Also, since hackers are using ChatGPT to generate phishing emails and deepfakes, reliable sites like these may help keep you from becoming a victim. Before using an AI-detection site to check possible AI-generated phishing emails, software, or other text, it may be worth testing the site yourself on text whose origin you already know.
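Testing a detector yourself can be as simple as scoring it on texts whose origin you already know. Below is a minimal Python sketch that does this with the scores reported in this experiment. The 50% cutoff for calling a text “AI” and the names `results`, `correct_count`, and `margin` are illustrative assumptions, not part of any detector’s output. Where a site reported a human probability, the sketch converts it to an AI probability as 100 minus that score.

```python
# Minimal sketch: score each detector on two texts whose origin is known.
# Scores are "percent chance the text is AI-written", taken from this experiment.
# Where a site reported human probability, it is converted as 100 - human_score.
results = {
    "Content at Scale": {"ai_blog": 100 - 0.0, "human_blog": 100 - 100.0},
    "ZeroGPT": {"ai_blog": 30.05, "human_blog": 18.63},
    "Copyleaks": {"ai_blog": 78.4, "human_blog": 100 - 66.3},
}

# Assumption (hypothetical cutoff): treat a text as "AI" when its AI score is above 50%.
THRESHOLD = 50.0


def correct_count(scores: dict, threshold: float = THRESHOLD) -> int:
    """Count how many of the two known texts the detector labeled correctly."""
    flagged_ai_blog = scores["ai_blog"] > threshold
    cleared_human_blog = scores["human_blog"] <= threshold
    return int(flagged_ai_blog) + int(cleared_human_blog)


def margin(scores: dict) -> float:
    """Gap, in percentage points, between the AI blog's and the human blog's AI scores."""
    return scores["ai_blog"] - scores["human_blog"]


for name, scores in results.items():
    print(f"{name}: {correct_count(scores)}/2 correct, margin {margin(scores):.2f} points")
```

A wider margin means the detector separated the two texts more clearly. With only two samples, this is an illustration of the idea rather than a real accuracy measurement; a meaningful self-check would use many texts of each kind.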

If you want to read the original, here is a link to the human-written blog; the AI-generated version is shown below.

ChatGPT’s Blog

Title: The Unveiling Challenge: How AI Hacking Redefines Cybersecurity

In the ever-evolving landscape of technology, where innovation paves the way for convenience and efficiency, it’s imperative to recognize that both sides of the coin exist. Just as artificial intelligence (AI) has been a game-changer in various industries, it’s also poised to reshape the battlefield of cybersecurity and hacking. As we dive into this dynamic realm, we’re confronted with a paradoxical scenario, where AI serves as both the guardian and the weapon, pushing cybersecurity experts to up their game.

The Rise of AI Hacking: A New Frontier

In recent years, the melding of AI with hacking techniques has introduced a level of sophistication previously unimaginable. Threat actors now leverage AI-driven tools to automate attacks, adapt to changing circumstances, and explore vulnerabilities at an unprecedented speed. This not only accelerates the scale of cyber threats but also challenges traditional defense mechanisms.

Weaponizing AI: A Double-Edged Sword

While AI-driven attacks pose serious challenges, AI can also be a powerful ally in bolstering cybersecurity. Machine learning algorithms can analyze vast amounts of data to detect anomalies, identify patterns, and predict potential threats, helping security professionals stay one step ahead of attackers. Automated threat hunting and real-time anomaly detection are becoming essential tools for safeguarding digital assets.

The Cat-and-Mouse Game: The Future of Cybersecurity

The intersection of AI and cybersecurity creates a cat-and-mouse game where hackers and defenders continuously adapt and evolve. As AI becomes more accessible, the barriers to entry for both ethical hackers and malicious actors diminish. This underscores the importance of proactive cybersecurity measures, continuous learning, and staying updated on the latest AI-driven threat vectors.

The Human Factor: Unmatched by AI

In this tech-driven era, it’s essential to remember that AI, despite its capabilities, lacks the human touch, intuition, and ethics. Human expertise is irreplaceable in understanding context, making judgment calls, and developing comprehensive strategies to combat emerging threats. While AI can analyze data and suggest actions, it’s the human element that truly defines the cybersecurity landscape.

A Call to Action: Strengthening the Defense

To tackle the challenges posed by AI hacking, organizations must invest in robust cybersecurity strategies. This includes continuous employee training, implementing AI-enhanced defense mechanisms, fostering collaboration between human experts and AI tools, and fostering a culture of security awareness.

As AI continues to infiltrate various aspects of our lives, it’s essential to recognize its potential for both good and harm. By harnessing its power responsibly, we can navigate this complex landscape and secure our digital future. The key lies in staying vigilant, adaptive, and always prepared to defend against the next wave of AI-driven cyber threats.